Welcome to the magical world of concurrency and parallelism.

If you have read about operating systems, you may be familiar with the term “thread”. The simplest definition of a thread is: “A sequence of CPU instructions that can be executed independently.”

While this may sound straightforward, threads can be sophisticated and hard for many people to understand. So let’s start by discussing processes first.

Process - The running code.

Imagine you have written a program hello_world.cpp that prints "Hello World" on the screen.

At this point, it is just a file on disk containing instructions. To run it, you first compile it into an executable. When you launch the executable, the operating system (OS) loads the program’s instructions into memory, creates a Process Control Block (PCB) to track the process, sets up its memory (including a stack and a heap), and then starts executing it.

The code inside hello_world.cpp consists of a single main() function that prints the message.

The thread that executes main() is called the main thread. As you may have heard or read, this is an example of a single-threaded application: one process running one thread, the simplest form of a process.

When a process is created, at least one thread is always created, and it’s called the main thread.

It is often said that a thread is a lightweight process.

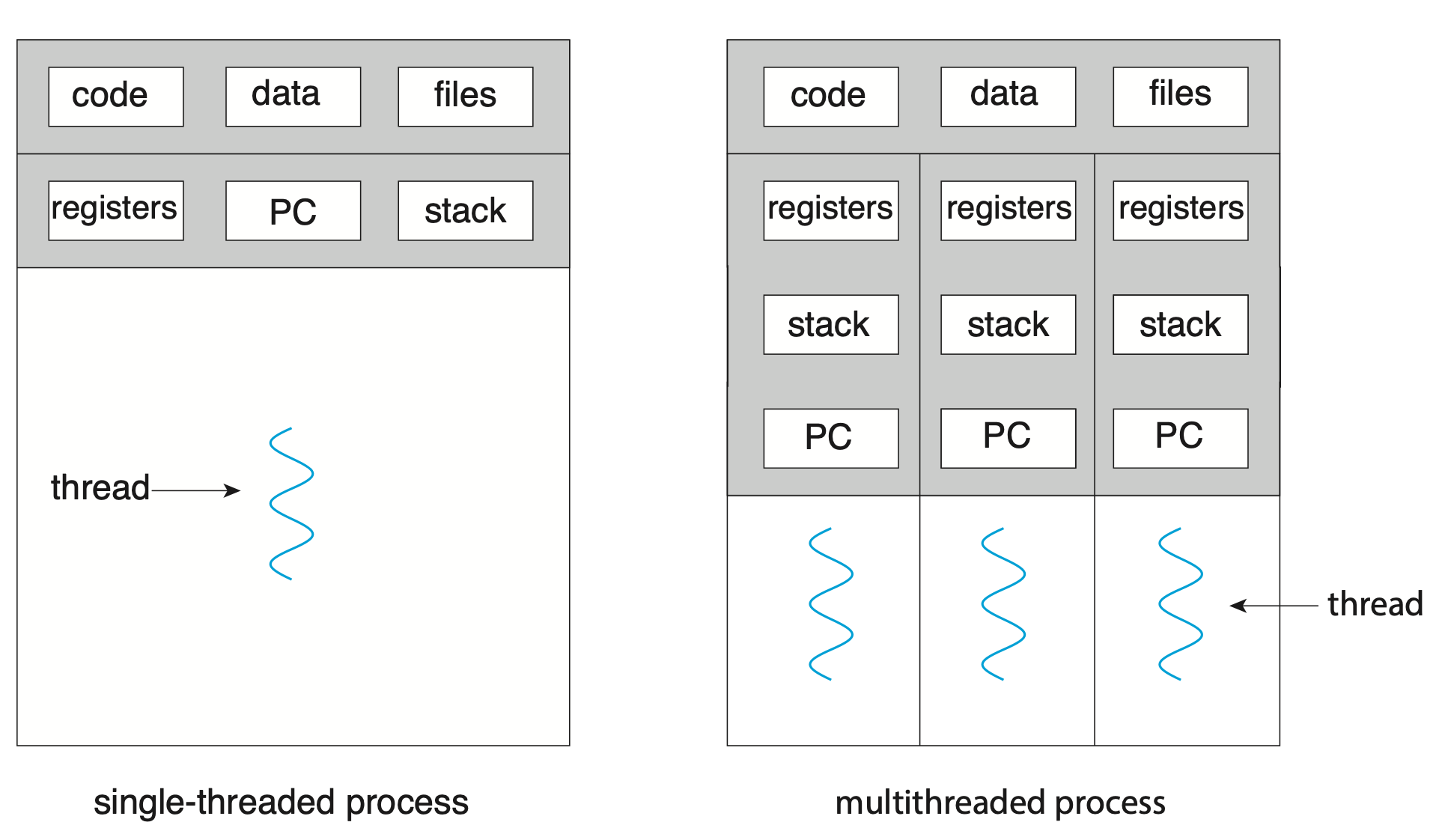

When a process is created, it is allocated a host of resources, such as a page table, shared libraries, communication sockets, open files, inter-process communication (IPC) resources, heap memory, and a lot more…

When an additional thread is created (beyond the main thread), it shares the resources of its parent process, so the OS does not have to allocate or deallocate most of these resources for each thread. This is what makes a thread lighter than a process.

However, each thread has its own stack, and the OS always creates a Thread Control Block (TCB) to store each thread’s state (such as its registers, program counter, and stack pointer). The TCB is used during context switching: when a thread is preempted, its state is saved in the TCB, and it is restored from there when the thread resumes.

Creating a thread in C++

Let’s take a look at a basic C++ program that starts a thread, t1, and waits for it to finish.

Since C++11, the standard library has included a thread library (`<thread>`), so you no longer need to use POSIX threads (pthreads) directly. Pay special attention to the call t1.join(): it blocks main() until t1 has finished executing, so the program does not exit while the thread is still running. (Destroying a std::thread that has not been joined or detached calls std::terminate.)

Why do we need threads?

Threads enable us to achieve concurrency.

Concurrency does not necessarily make a program faster. Threads let several tasks make progress during overlapping periods of time, but speed comes from parallelism, where tasks actually run at the same time on different cores.

Let me explain this statement with an example.

Understanding Concurrency

Suppose you have three roommates who love reading books. However, they share a single copy of a book, and each of them must finish reading it within a specific period.

All three roommates agree to follow a round-robin pattern for reading the book.

Person A starts reading and continues for an hour before taking a break. Person A notes down the page number and line number in a notebook or piece of paper, allowing them to resume from where they left off during the next reading session.

Now it’s time for Person B to read the book while Person A takes a break, but Person C still has to wait. Person B also reads for an hour, notes down the page and line numbers, and then hands over the book to Person C.

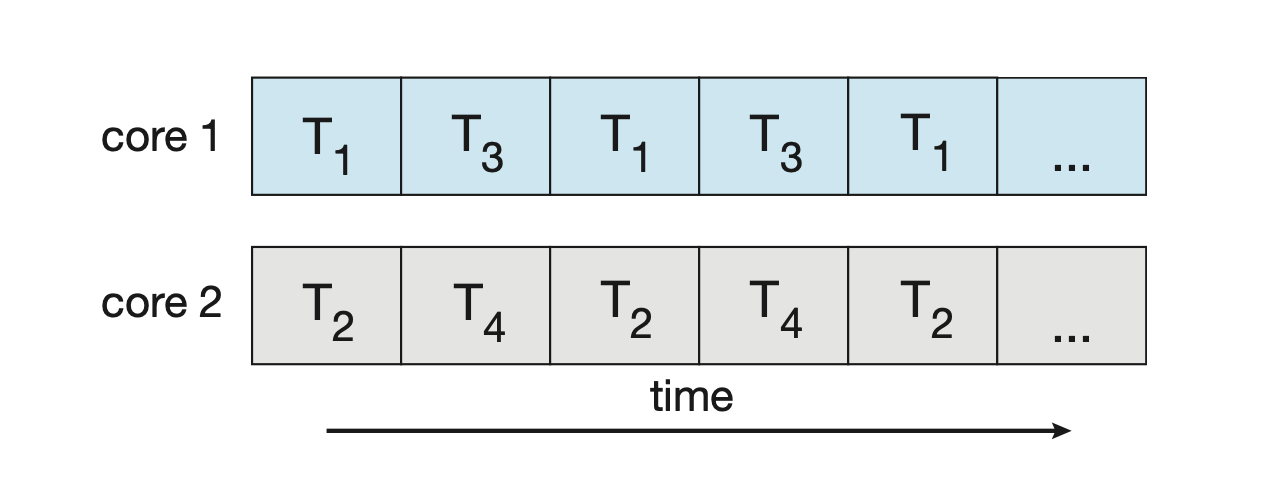

As you can see, when resources are limited (here, the book represents a single CPU), the roommates take turns instead of reading at the same time, so each of them finishes later than they would with their own copy. The notebook where the roommates record their reading progress is analogous to the Thread Control Block, and handing the book from one person to the next (A to B, B to C, and so on) is called context switching.

Because of this turn-taking, plus the overhead of each context switch, concurrency on a single CPU is slower than parallelism.

Understanding Parallelism

Now let’s explore the concept of parallelism, where we increase the number of resources to avoid context switching.

Continuing with the previous example, let’s increase the number of books to three, allowing the roommates to read independently. Now each person finishes as fast as their own reading speed allows, and no one has to wait.

Parallelism beats concurrency on completion time, but it needs more resources (three books instead of one).

However, there’s a caveat. We still have limited resources. Consider a college library that can only provide a limited number of books for a given subject.

For instance, if we have 100 students, the library may have at most 20 copies of a particular title. Even though 20 students can read in parallel, the remaining 80 students still have to wait.

Moreover, it is not feasible for a single student to read a book all day without taking a break. While a student is on a break from reading, we cannot keep the book assigned to them, as it would waste resources while others are waiting.

When to Use Multithreading?

Broadly speaking, we can categorize the usage of multiple threads in the following scenarios:

- When you want to keep doing useful work while another thread or process is blocked waiting (for example, on disk or network I/O).

- When you want to distribute the workload across multiple CPU cores or multiple CPUs.

Modern Process Design

In modern systems, a hybrid approach is often deployed, combining parallelism with concurrency to save resources and achieve faster results.

- Concurrency occurs when threads (from the same or different processes) take turns on the same CPU or CPU core, so their execution is interleaved over time.

- Parallelism occurs when threads (from the same or different processes) run at the same time on different CPUs or cores.

This hybrid model allows systems to use all available cores efficiently while staying responsive, making it a good fit for both high-throughput and interactive applications.

Additionally, you can select or influence which core(s) a thread or process runs on. This is known as CPU affinity or processor affinity.

CPU Affinity

CPU affinity is the ability to bind a thread or process to a specific core (or set of cores). This is particularly useful in performance-critical applications, real-time systems, or high-performance computing, where you want to:

- Reduce context switching

- Improve cache locality

- Avoid CPU contention

⚠️ Note that overusing or misusing CPU affinity can backfire and produce unexpected results! Depending on the OS and API, affinity may only be a hint: the OS scheduler has the final say, although many operating systems respect affinity settings when they are set explicitly.

Enough of theory! Show me the code.

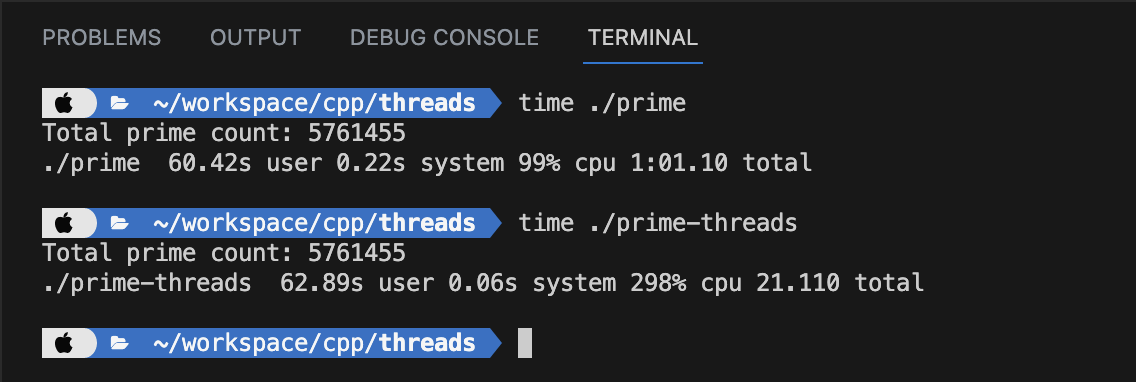

As an example, consider a multithreaded C++ program that counts the prime numbers between 0 and 99,999,999.

We use 4 threads: the range is divided into 4 parts, each thread counts the prime numbers in its own sub-range, and the individual counts are then added up to get the final number of primes.


The same program can also be implemented in Go.

While the Go version appears simpler than the C++ one, it is worth noting that C++ has made significant progress since C++11 in simplifying many aspects of code, including concurrency, and the language continues to evolve toward more intuitive constructs.

Thank You

I hope you enjoyed reading this and gained new insights. I am approaching concurrency with a beginner’s mindset myself, and this post serves as my notes on my journey of learning and understanding it. I welcome your feedback: if you find any mistakes, please feel free to correct me, and if you have any suggestions, kindly share them in the comments.

Keep building. 🔧💡